PyTorch Course

Introduction

This is a brief look into PyTorch from here. It is widely considered the most popular deep learning research framework.

Vectors

General

So I just love terms: I never remember them and spend my life watching YouTube to find out "oh, they meant that". For vectors, the course first went on about points on an x,y coordinate system, where a point is represented by its x and y values: x runs along the horizontal axis and y along the vertical axis. For vectors we count how many steps we go:

- left or right

- up or down

We express a vector by writing these steps (its components) in square brackets, e.g. $[x, y]$.

Like points, the components can be negative, meaning right to left for x (or downwards for y).

The above is a two-dimensional vector; we can of course have three dimensions by adding the z direction.
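In PyTorch a vector is just a 1D tensor of its components. A small sketch (the values are made up for illustration):

```python
import torch

# 2D vector: 3 steps right (x) and 2 steps up (y)
v = torch.tensor([3.0, 2.0])

# Negative components point the other way: 3 steps left and 2 steps down
w = torch.tensor([-3.0, -2.0])

# 3D vector: adds a z component
u = torch.tensor([1.0, 2.0, 3.0])

print(v.shape, w.shape, u.shape)  # torch.Size([2]) torch.Size([2]) torch.Size([3])
```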

Scalar Operations

A scalar operation is when you multiply or divide a vector by a single number (a scalar).

Scalar Multiplication

Multiplying a vector by a scalar stretches or shrinks its length.

Scalar Division

Dividing a vector by a scalar greater than 1 reduces its magnitude.
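A quick sketch of both operations on an illustrative vector $[3, 4]$ (length 5). Multiplying by a negative scalar also flips the direction:

```python
import torch

v = torch.tensor([3.0, 4.0])

doubled = v * 2    # tensor([6., 8.])    stretched: twice as long
halved = v / 2     # tensor([1.5, 2.])   shrunk: half as long
flipped = v * -1   # tensor([-3., -4.])  a negative scalar reverses the direction

# Lengths: 5.0, 10.0 and 2.5
print(torch.linalg.norm(v), torch.linalg.norm(doubled), torch.linalg.norm(halved))
```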

Vector Addition

To add two vectors, simply add their components:

$$[a_1, a_2] + [b_1, b_2] = [a_1 + b_1, a_2 + b_2]$$
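In PyTorch, `+` on two tensors of the same shape does exactly this component-by-component addition (the vectors below are made up):

```python
import torch

a = torch.tensor([1.0, 2.0])
b = torch.tensor([3.0, -1.0])

c = a + b   # [1 + 3, 2 + (-1)]
print(c)    # tensor([4., 1.])
```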

Vector Length (Magnitude)

The magnitude of a vector $\vec{v} = [x, y]$ is:

$$\|\vec{v}\| = \sqrt{x^2 + y^2}$$

For example, if , then:
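A worked check of the formula, using $[3, 4]$ as an illustrative vector (not necessarily the one from the original example): $\sqrt{3^2 + 4^2} = \sqrt{25} = 5$. `torch.linalg.norm` computes the same Euclidean length:

```python
import math
import torch

v = torch.tensor([3.0, 4.0])  # illustrative vector

by_formula = math.sqrt(3.0**2 + 4.0**2)  # sqrt(9 + 16) = 5.0
by_torch = torch.linalg.norm(v).item()   # 5.0

print(by_formula, by_torch)
```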

Basis Vectors

In 2D space, the standard basis vectors are:

$$\hat{i} = [1, 0], \quad \hat{j} = [0, 1]$$

Any vector can be written as a combination of these:

$$\vec{v} = [x, y] = x\hat{i} + y\hat{j}$$

For example, if , then:
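A sketch of building an illustrative vector $[3, 4]$ from the basis vectors, i.e. $3\hat{i} + 4\hat{j}$:

```python
import torch

i_hat = torch.tensor([1.0, 0.0])
j_hat = torch.tensor([0.0, 1.0])

# 3 steps along i-hat plus 4 steps along j-hat
v = 3 * i_hat + 4 * j_hat
print(v)  # tensor([3., 4.])
```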

X-Component

When the vector is written like this, the x-component is just the x value.

But when we have (which I do not understand)

It is not

Y-Component

This works the same way for the y-component.

Creating Tensors

Here is a list of common types in PyTorch:

| Type | What is it | Dimensions | PyTorch Representation | Typical Casing |
|---|---|---|---|---|
| Scalar | A single value with magnitude only | 0D | `torch.tensor(7)` | Lowercase |
| Vector | Ordered components with direction | 1D | `torch.tensor([5, 3])` | Lowercase |
| Matrix | 2D array for transformations | 2D | `torch.tensor([[1, 2], [3, 4]])` | Uppercase |
| Tensor | Generalized nD array | 3D+ | `torch.randn(2, 3, 4)` | Uppercase |
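A quick way to check which row of the table a tensor belongs to is its `ndim` (number of dimensions) and `shape`. A sketch using the table's own examples, with variable names cased per the table's convention:

```python
import torch

scalar = torch.tensor(7)
vector = torch.tensor([5, 3])
MATRIX = torch.tensor([[1, 2], [3, 4]])
TENSOR = torch.randn(2, 3, 4)

for name, t in [("scalar", scalar), ("vector", vector), ("MATRIX", MATRIX), ("TENSOR", TENSOR)]:
    print(f"{name}: ndim={t.ndim}, shape={tuple(t.shape)}")

# A 0D tensor can be turned back into a plain Python number
print(scalar.item())  # 7
```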

Getting Started

Most of the video for the second section centered on the following steps:

- Import stuff, e.g. PyTorch, matplotlib

- Create a dataset

- Split the data into training and testing data

- Build a model by subclassing nn.Module

- Create a loss function (something that measures how far the model's predictions are from the expected outputs)

- Create an optimizer (something that updates the model's parameters to reduce the loss)

- Create a training loop

- Create a testing loop

- Make predictions on the test data

- Plot training vs test results

Using Scripts from GitHub

I liked this bit as it is just plain useful, and it is here so I do not forget.

```python
import requests
from pathlib import Path

# Download helper functions from Learn PyTorch repo (if not already downloaded)
if Path("helper_functions.py").is_file():
    print("helper_functions.py already exists, skipping download")
else:
    print("Downloading helper_functions.py")
    # Note: you need the "raw" GitHub URL for this to work
    request = requests.get("https://raw.githubusercontent.com/mrdbourke/pytorch-deep-learning/main/helper_functions.py")
    with open("helper_functions.py", "wb") as f:
        f.write(request.content)
```

Training Loop

Here is the main approach to a training loop in PyTorch.
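A minimal sketch of the usual five steps inside each epoch. The toy straight-line data ($y = 0.7x + 0.3$), the `nn.Linear` model, `MSELoss` and the learning rate are my own choices for illustration, not the course's exact setup:

```python
import torch
from torch import nn

torch.manual_seed(42)

# Toy training data (assumed for illustration): y = 0.7x + 0.3
X_train = torch.arange(0, 0.8, 0.02).unsqueeze(dim=1)  # shape [N, 1]
y_train = 0.7 * X_train + 0.3

model = nn.Linear(in_features=1, out_features=1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

epochs = 1000
for epoch in range(epochs):
    model.train()                      # put the model in training mode
    y_pred = model(X_train)            # 1. forward pass
    loss = loss_fn(y_pred, y_train)    # 2. calculate the loss
    optimizer.zero_grad()              # 3. zero the gradients (they accumulate by default)
    loss.backward()                    # 4. backpropagation: compute the gradients
    optimizer.step()                   # 5. update the parameters

    if epoch % 200 == 0:
        print(f"Epoch {epoch} | loss {loss.item():.5f}")
```

The order of steps 3 to 5 matters: zeroing after `backward()` would throw away the gradients before `step()` could use them.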

Testing Loop
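The testing loop is the same forward pass and loss, but in evaluation mode and without gradient tracking or parameter updates. A minimal sketch; the untrained toy model and the test data are assumptions for illustration:

```python
import torch
from torch import nn

torch.manual_seed(42)

# Toy model and test data (assumed for illustration)
model = nn.Linear(in_features=1, out_features=1)
X_test = torch.arange(0.8, 1.0, 0.02).unsqueeze(dim=1)
y_test = 0.7 * X_test + 0.3
loss_fn = nn.MSELoss()

model.eval()                                # evaluation mode: turns off dropout, batchnorm updates
with torch.inference_mode():                # no gradient tracking: faster, less memory
    test_pred = model(X_test)               # 1. forward pass
    test_loss = loss_fn(test_pred, y_test)  # 2. calculate the loss (no backward, no optimizer step)

print(f"Test loss: {test_loss.item():.5f}")
print(test_pred.requires_grad)  # False
```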

Binary and Multi-Class Classification

The workflow is the same, but there are some differences, and this table probably sums them up pretty nicely.

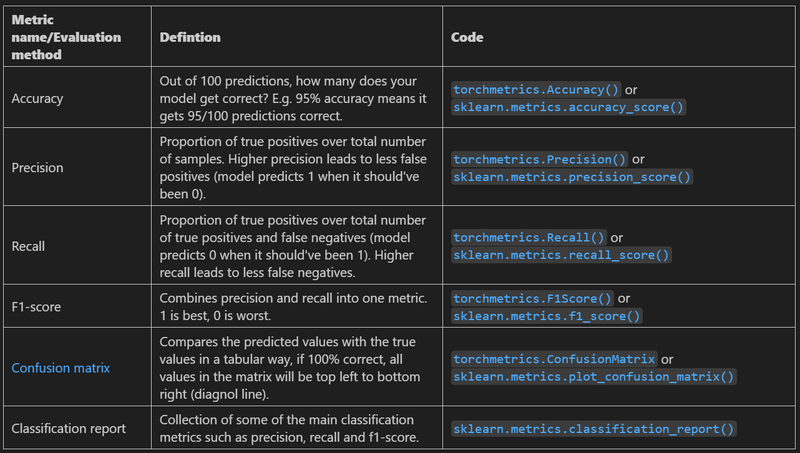

Classification Metrics

Again, a really useful slide from Daniel Bourke. He has been a joy to watch, and with a 24-hour course that is saying something.
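To see what the common metrics actually count, here is a sketch computing accuracy, precision, recall and F1 by hand for a binary problem. The labels are made up; in practice `torchmetrics` provides ready-made `Accuracy`, `Precision`, `Recall` and `F1Score` classes:

```python
import torch

y_true = torch.tensor([1, 0, 1, 1, 0, 0, 1, 0])  # made-up labels
y_pred = torch.tensor([1, 0, 0, 1, 0, 1, 1, 1])  # made-up predictions

tp = ((y_pred == 1) & (y_true == 1)).sum().item()  # true positives: 3
tn = ((y_pred == 0) & (y_true == 0)).sum().item()  # true negatives: 2
fp = ((y_pred == 1) & (y_true == 0)).sum().item()  # false positives: 2
fn = ((y_pred == 0) & (y_true == 1)).sum().item()  # false negatives: 1

accuracy = (tp + tn) / len(y_true)                  # 5/8 = 0.625
precision = tp / (tp + fp)                          # of predicted positives, how many were right: 0.6
recall = tp / (tp + fn)                             # of actual positives, how many were found: 0.75
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two: ~0.667

print(accuracy, precision, recall, f1)
```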

Summary of Classification and Workflow

So, having got through the first 14 hours, here are the things I took away.

Lining up the Input/Output Data Shape

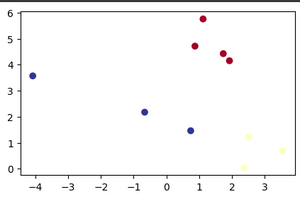

In the example we used the make_blobs function from the sklearn library. With centers=3, it produces 3 different clusters (classes) of data points.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import make_blobs

X1, y1 = make_blobs(n_samples=10, centers=3, n_features=2, random_state=0)
print(X1.shape)
print(X1)
print(y1)
```

Which gives:

```
(10, 2)
[[ 1.12031365  5.75806083]
 [ 1.7373078   4.42546234]
 [ 2.36833522  0.04356792]
 [ 0.87305123  4.71438583]
 [-0.66246781  2.17571724]
 [ 0.74285061  1.46351659]
 [-4.07989383  3.57150086]
 [ 3.54934659  0.6925054 ]
 [ 2.49913075  1.23133799]
 [ 1.9263585   4.15243012]]
[0 0 1 0 2 2 2 1 1 0]
```


We can see that in this instance each input sample is a point, so there are 2 numbers (features) per sample, and each label is a single integer saying which class the point belongs to. So when we build the model we have:

```python
import torch
from torch import nn

# Setup device-agnostic code
device = "cuda" if torch.cuda.is_available() else "cpu"

NUM_CLASSES = 3
NUM_FEATURES = 2
RANDOM_SEED = 42

# Build model
class BlobModel(nn.Module):
    def __init__(self, input_features, output_features, hidden_units=8):
        """Initializes all required hyperparameters for a multi-class classification model.

        Args:
            input_features (int): Number of input features to the model.
            output_features (int): Number of output features of the model
                (how many classes there are).
            hidden_units (int): Number of hidden units between layers, default 8.
        """
        super().__init__()
        self.linear_layer_stack = nn.Sequential(
            nn.Linear(in_features=input_features, out_features=hidden_units),
            # nn.ReLU(), # <- does our dataset require non-linear layers? (try uncommenting and see if the results change)
            nn.Linear(in_features=hidden_units, out_features=hidden_units),
            # nn.ReLU(), # <- does our dataset require non-linear layers? (try uncommenting and see if the results change)
            nn.Linear(in_features=hidden_units, out_features=output_features), # how many classes are there?
        )

    def forward(self, x):
        return self.linear_layer_stack(x)

# Create an instance of BlobModel and send it to the target device
model_4 = BlobModel(input_features=NUM_FEATURES,
                    output_features=NUM_CLASSES,
                    hidden_units=8).to(device)
model_4
```

Also note that the output of each layer is the input to the next layer, so each layer's out_features must match the next layer's in_features.
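A small sketch of what happens when the shapes line up and when they do not. The layer sizes are made up; the mismatched stack passes 8 values into a layer expecting 5, and PyTorch raises a `RuntimeError`:

```python
import torch
from torch import nn

x = torch.randn(4, 2)  # batch of 4 samples, 2 features each

# 2 -> 8 -> 8 -> 3: every out_features matches the next in_features
good = nn.Sequential(nn.Linear(2, 8), nn.Linear(8, 8), nn.Linear(8, 3))
good_out = good(x)
print(good_out.shape)  # torch.Size([4, 3])

# 2 -> 8, then a layer expecting 5 inputs: mismatch
bad = nn.Sequential(nn.Linear(2, 8), nn.Linear(5, 3))
error_message = None
try:
    bad(x)
except RuntimeError as e:
    error_message = str(e)
    print("Shape error:", error_message)
```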

Ways to Improve Results

- Add more layers - more chances to learn patterns

- Add more hidden units - more features

- Fit/Run for longer - more epochs

- Change the activation functions - e.g. add non-linear ones such as ReLU between layers (for binary classification the output usually uses sigmoid)

- Change the learning rate, i.e. the lr in our optimizer

Converting Classification Results

A fair bit of time was spent showing how to convert logits into prediction labels, which went along these lines:

logits -> prediction probabilities -> prediction labels

For sigmoid (binary classification):

```python
test_pred = torch.round(torch.sigmoid(test_logits))
```

For softmax (multi-class classification):

```python
test_pred = torch.softmax(test_logits, dim=1).argmax(dim=1)
```
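Both conversions on made-up logits, to see the intermediate prediction probabilities. Because softmax preserves ordering, `argmax` of the logits gives the same label as `argmax` of the probabilities:

```python
import torch

# Binary: one logit per sample
binary_logits = torch.tensor([-2.0, 0.5, 3.0])
binary_probs = torch.sigmoid(binary_logits)   # approx [0.12, 0.62, 0.95]
binary_preds = torch.round(binary_probs)      # tensor([0., 1., 1.])

# Multi-class: one logit per class, per sample (2 samples, 3 classes)
multi_logits = torch.tensor([[2.0, 0.1, -1.0],
                             [0.2, 0.3, 3.0]])
multi_probs = torch.softmax(multi_logits, dim=1)  # each row sums to 1
multi_preds = multi_probs.argmax(dim=1)           # tensor([0, 2])

print(binary_preds, multi_preds)
```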

Visualization

I really like the amount of plotting (no pun intended). For me, it makes things far easier to understand.

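```python
import matplotlib.pyplot as plt
import torch
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split

NUM_CLASSES = 3
NUM_FEATURES = 2
RANDOM_SEED = 42

# 1. Create multi-class data (n_samples and cluster_std are assumed values)
X_blob, y_blob = make_blobs(n_samples=1000,
                            n_features=NUM_FEATURES,
                            centers=NUM_CLASSES,
                            cluster_std=1.5,
                            random_state=RANDOM_SEED)

# 2. Turn data into tensors
X_blob = torch.from_numpy(X_blob).type(torch.float)
y_blob = torch.from_numpy(y_blob).type(torch.LongTensor)

# 3. Split into train and test sets
X_blob_train, X_blob_test, y_blob_train, y_blob_test = train_test_split(X_blob,
    y_blob,
    test_size=0.2,
    random_state=RANDOM_SEED
)

# 4. Plot data
plt.figure(figsize=(10, 7))
plt.scatter(X_blob[:, 0], X_blob[:, 1], c=y_blob, cmap=plt.cm.RdYlBu);
```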

Computer Vision

Getting Data

Getting data was touched upon, along with the need to examine the shape of the data. The image format can change from dataset to dataset, e.g. grayscale vs. color, and the color channel may come before the width and height or after them. There are helpers for well-known datasets in PyTorch (torchvision.datasets):

```python
from torchvision import datasets
from torchvision.transforms import ToTensor

# Setup training data
train_data = datasets.FashionMNIST(
    root="data", # where to download data to?
    train=True, # get training data
    download=True, # download data if it doesn't exist on disk
    transform=ToTensor(), # images come as PIL format, we want to turn into Torch tensors
    target_transform=None # you can transform labels as well
)

# Setup testing data
test_data = datasets.FashionMNIST(
    root="data",
    train=False, # get test data
    download=True,
    transform=ToTensor()
)
```
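Indexing one of these datasets (e.g. `train_data[0]`) returns an `(image, label)` pair, with the image in PyTorch's `[C, H, W]` layout. A sketch of checking and rearranging that shape, using a random tensor as a stand-in for a 1x28x28 grayscale FashionMNIST image so it runs without downloading anything:

```python
import torch

# Stand-in for a FashionMNIST image: [color_channels, height, width]
image = torch.rand(1, 28, 28)
print(image.shape)  # torch.Size([1, 28, 28])

# Matplotlib expects [H, W, C] (or just [H, W] for grayscale)
image_hwc = image.permute(1, 2, 0)  # torch.Size([28, 28, 1])
image_hw = image.squeeze()          # torch.Size([28, 28]), e.g. for plt.imshow(image_hw, cmap="gray")

print(image_hwc.shape, image_hw.shape)
```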

Mini Batching

The DataLoader can divide the data into batches for processing. Another important part of this is shuffling the training data, so the model does not learn from whatever order the data happened to be loaded in. This changes the training and testing loops slightly: the loop now runs not for every image, but for every batch.
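A sketch of batching with `DataLoader`. The 100 random "images" and labels are made up and wrapped in a `TensorDataset`; with a real dataset such as FashionMNIST you would pass `train_data`/`test_data` instead. Shuffle the training data, but not the test data:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

BATCH_SIZE = 32

# Made-up data: 100 grayscale 28x28 images with labels 0-9
X = torch.rand(100, 1, 28, 28)
y = torch.randint(0, 10, (100,))
dataset = TensorDataset(X, y)

train_dataloader = DataLoader(dataset, batch_size=BATCH_SIZE, shuffle=True)
test_dataloader = DataLoader(dataset, batch_size=BATCH_SIZE, shuffle=False)

print(len(train_dataloader))  # 4 batches

# The loop now runs once per batch rather than once per image
batch_sizes = []
for batch, (X_batch, y_batch) in enumerate(train_dataloader):
    batch_sizes.append(len(X_batch))
print(batch_sizes)  # [32, 32, 32, 4]
```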

Formatting of Data

The concept of flattening was introduced. This is where the pixel data is combined from width x height into a single dimension (e.g. a 28x28 image becomes a vector of 784 values).
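`nn.Flatten` does this per sample: by default it keeps the batch dimension (dim 0) and combines everything after it. A sketch on a made-up batch:

```python
import torch
from torch import nn

flatten = nn.Flatten()            # start_dim=1 by default, so the batch dimension is kept
x = torch.rand(32, 1, 28, 28)     # made-up batch: [batch, channels, height, width]
flat = flatten(x)

print(flat.shape)  # torch.Size([32, 784]), since 1 * 28 * 28 = 784
```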

Convolutional Neural Network

Introduction

This was all relatively simple, but now we are on CNNs (convolutional neural networks), not the news channel. Fortunately people have put a lot of effort into making this easier for idiots like me. I particularly like https://poloclub.github.io/cnn-explainer/?ref=mrdbourke.com

Building a model

I need visuals to get going and this course has helped me tremendously. Before I provide Daniel's code, here is the CNN Explainer screenshot.

Now the code.

```python
import torch
from torch import nn

device = "cuda" if torch.cuda.is_available() else "cpu"
class_names = train_data.classes  # the 10 FashionMNIST class names (train_data from "Getting Data")

# Create a convolutional neural network
class FashionMNISTModelV2(nn.Module):
    """
    Model architecture copying TinyVGG from:
    https://poloclub.github.io/cnn-explainer/
    """
    def __init__(self, input_shape: int, hidden_units: int, output_shape: int):
        super().__init__()
        self.block_1 = nn.Sequential(
            nn.Conv2d(in_channels=input_shape,
                      out_channels=hidden_units,
                      kernel_size=3, # how big is the square that's going over the image?
                      stride=1, # default
                      padding=1),# options = "valid" (no padding) or "same" (output has same shape as input) or int for specific number
            nn.ReLU(),
            nn.Conv2d(in_channels=hidden_units,
                      out_channels=hidden_units,
                      kernel_size=3,
                      stride=1,
                      padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2,
                         stride=2) # default stride value is same as kernel_size
        )
        self.block_2 = nn.Sequential(
            nn.Conv2d(hidden_units, hidden_units, 3, padding=1),
            nn.ReLU(),
            nn.Conv2d(hidden_units, hidden_units, 3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2)
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            # Where did this in_features shape come from?
            # It's because each layer of our network compresses and changes the shape of our input data.
            nn.Linear(in_features=hidden_units*7*7,
                      out_features=output_shape)
        )

    def forward(self, x: torch.Tensor):
        x = self.block_1(x)
        # print(x.shape)
        x = self.block_2(x)
        # print(x.shape)
        x = self.classifier(x)
        # print(x.shape)
        return x

torch.manual_seed(42)
model_2 = FashionMNISTModelV2(input_shape=1,
                              hidden_units=10,
                              output_shape=len(class_names)).to(device)
model_2
```

You can see the model has 2 blocks, just like the picture. Here is the first block in the picture:

conv_1_1 → relu_1_1 → conv_1_2 → relu_1_2 → max_pool_1

The last layer is known as the classifier layer. Our input parameters differ slightly from the picture: we are using grayscale images while they use color, so our input shape is 1 rather than 3 (you can see the 64x64 image with 3 channels at the top right of the picture). The 10 hidden units match the picture: if you count downwards, there are 10 feature maps in each layer. Finally, the output shape is the number of class labels.

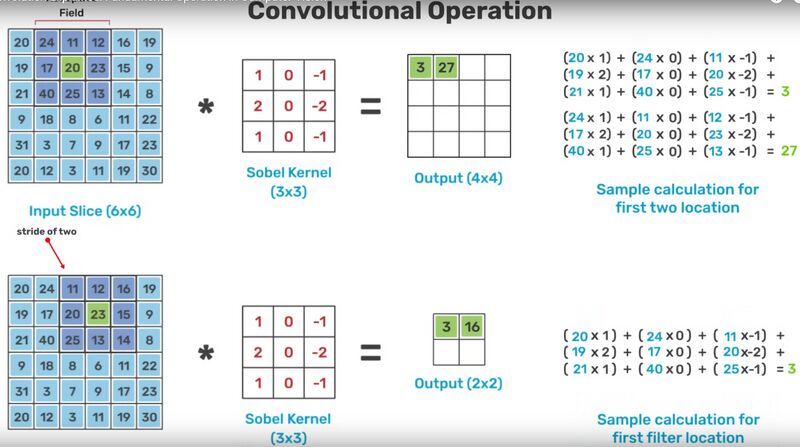

A bit about the hyperparameters of 2D convolutional operations:

- Kernel Size - the size of the kernel, the square window that slides over the image (kernel_size=3 means 3x3)

- Stride - the number of pixels the kernel moves at a time

- Padding - extra pixels (zeros by default) added around the border of the input, so the kernel also covers the edge pixels; with kernel_size=3, padding=1 keeps the output the same height and width as the input

I like how you can see how the result is calculated. I looked up a Sobel kernel: it is used for edge detection, especially highlighting intensity changes along horizontal or vertical directions. However, for nn.Conv2d the kernel values are not hand-picked like a Sobel kernel; they are parameters the CNN learns during training.
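The three hyperparameters together decide the output size. For square inputs (and the default dilation of 1) the height/width is $\lfloor (\text{in} + 2p - k) / s \rfloor + 1$. A sketch checking that formula against real `nn.Conv2d` layers on a made-up 28x28 input; `conv_output_size` is my own helper name:

```python
import torch
from torch import nn

def conv_output_size(size: int, kernel_size: int, stride: int = 1, padding: int = 0) -> int:
    """Output height/width of a convolution with dilation=1."""
    return (size + 2 * padding - kernel_size) // stride + 1

x = torch.rand(1, 1, 28, 28)  # [batch, channels, height, width]

results = []
for kernel_size, stride, padding in [(3, 1, 1), (3, 1, 0), (5, 2, 0)]:
    conv = nn.Conv2d(in_channels=1, out_channels=10,
                     kernel_size=kernel_size, stride=stride, padding=padding)
    out = conv(x)
    expected = conv_output_size(28, kernel_size, stride, padding)
    results.append((out.shape[-1], expected))
    print(f"k={kernel_size}, s={stride}, p={padding}: {tuple(out.shape)} (formula: {expected})")

# The kernel values are learnable parameters, one 5x5 kernel per output channel here
print(conv.weight.shape, conv.weight.requires_grad)  # torch.Size([10, 1, 5, 5]) True
```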

For the max_pool layer, which has a 2x2 kernel, the height and width are each halved, so the output has a quarter of the values: it keeps the highest value from each 2x2 (4-pixel) block.
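A tiny worked example on a made-up 4x4 "image": each 2x2 block is replaced by its maximum, so 16 values become 4:

```python
import torch
from torch import nn

x = torch.tensor([[[[ 1.,  2.,  5.,  6.],
                    [ 3.,  4.,  7.,  8.],
                    [ 9., 10., 13., 14.],
                    [11., 12., 15., 16.]]]])  # shape [1, 1, 4, 4]

pool = nn.MaxPool2d(kernel_size=2)  # stride defaults to kernel_size
pooled = pool(x)

print(pooled)        # tensor([[[[ 4.,  8.], [12., 16.]]]])
print(pooled.shape)  # torch.Size([1, 1, 2, 2])
```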

Lastly, when building the classifier layer, Daniel stressed that it is not easy to know the in_features shape in advance.

```python
self.classifier = nn.Sequential(
    nn.Flatten(),
    # Where did this in_features shape come from?
    # It's because each layer of our network compresses and changes the shape of our input data.
    nn.Linear(in_features=hidden_units*7*7,
              out_features=output_shape)
)
```

To help calculate this, Daniel passed an image through the layers separately and printed the shapes. In this case the final output from block_2 had shape [1, 10, 7, 7]. When in_features=hidden_units (without the *7*7), the error is:

```
RuntimeError: mat1 and mat2 shapes cannot be multiplied (1x490 and 10x10).
```

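The first shape is the data coming into the Linear layer and the second is the layer's weight. The 1x490 is the flattened output of block_2: flattening combines the 10, 7, 7 dimensions into one, i.e. 10x7x7 = 490 values per image. The 10x10 comes from our Linear layer, with in_features=hidden_units=10 and out_features=10 (one per class). For the multiplication to work, in_features must be 490, so working back from the flattening we need hidden_units*7*7.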
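The "pass something through and print the shape" trick can be written as a small probe. A sketch that rebuilds the two blocks from FashionMNISTModelV2 standalone, pushes a dummy all-zeros 1x28x28 image through them, and reads off in_features instead of working it out by hand:

```python
import torch
from torch import nn

hidden_units = 10

# Same layer settings as block_1 and block_2 in FashionMNISTModelV2
block_1 = nn.Sequential(
    nn.Conv2d(1, hidden_units, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(hidden_units, hidden_units, kernel_size=3, padding=1), nn.ReLU(),
    nn.MaxPool2d(2),
)
block_2 = nn.Sequential(
    nn.Conv2d(hidden_units, hidden_units, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(hidden_units, hidden_units, kernel_size=3, padding=1), nn.ReLU(),
    nn.MaxPool2d(2),
)

with torch.no_grad():
    dummy = torch.zeros(1, 1, 28, 28)  # one fake FashionMNIST-sized image
    out = block_2(block_1(dummy))      # 28x28 -> 14x14 -> 7x7
    print(out.shape)                   # torch.Size([1, 10, 7, 7])

    in_features = nn.Flatten()(out).shape[1]  # 10 * 7 * 7 = 490
    classifier = nn.Sequential(nn.Flatten(), nn.Linear(in_features, 10))
    logits = classifier(out)
    print(in_features, logits.shape)   # 490 torch.Size([1, 10])
```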

Functionizing Training, Testing and Running

We eventually put these loops into reusable functions. These will differ depending on your needs, but here is an example to remind me.

Functionized Training Loop

```python
import torch

device = "cuda" if torch.cuda.is_available() else "cpu"

def train_step(model: torch.nn.Module,
               data_loader: torch.utils.data.DataLoader,
               loss_fn: torch.nn.Module,
               optimizer: torch.optim.Optimizer,
               accuracy_fn,
               device: torch.device = device):
    train_loss, train_acc = 0, 0
    model.to(device)
    model.train() # Enable training mode (e.g. dropout, batchnorm)
    for batch, (X, y) in enumerate(data_loader):
        X, y = X.to(device), y.to(device)

        # 1. Forward pass (binary version: one logit per sample)
        y_pred = model(X).squeeze() # Shape: (batch_size,)

        # 2. Calculate loss (e.g. nn.BCEWithLogitsLoss, which expects float labels)
        loss = loss_fn(y_pred, y)
        train_loss += loss.item()

        # 3. Calculate accuracy (binary threshold; for multi-class, skip .squeeze() and use y_pred.argmax(dim=1))
        # accuracy_fn is assumed to accept NumPy arrays and return a percentage
        pred_labels = (torch.sigmoid(y_pred) > 0.5).float()
        train_acc += accuracy_fn(
            y_true=y.view(-1).cpu().numpy(),
            y_pred=pred_labels.view(-1).cpu().numpy()
        )

        # 4. Backpropagation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # 5. Average metrics
    train_loss /= len(data_loader)
    train_acc /= len(data_loader)
    print(f"Train loss: {train_loss:.5f} | Train accuracy: {train_acc:.2f}%")
    return train_loss, train_acc
```

Functionized Testing Loop

```python
import torch

device = "cuda" if torch.cuda.is_available() else "cpu"

def test_step(data_loader: torch.utils.data.DataLoader,
              model: torch.nn.Module,
              loss_fn: torch.nn.Module,
              accuracy_fn,
              device: torch.device = device):
    test_loss, test_acc = 0, 0
    model.to(device)
    model.eval() # Evaluation mode disables dropout, etc.
    with torch.inference_mode(): # No gradients needed
        for X, y in data_loader:
            X, y = X.to(device), y.to(device)

            # Forward pass (binary version: one logit per sample)
            test_pred = model(X).squeeze() # Shape: (batch_size,)

            # Loss
            test_loss += loss_fn(test_pred, y).item()

            # Prediction → binary threshold (for multi-class use test_pred.argmax(dim=1))
            pred_labels = (torch.sigmoid(test_pred) > 0.5).float()

            # Accuracy
            test_acc += accuracy_fn(
                y_true=y.view(-1).cpu().numpy(),
                y_pred=pred_labels.view(-1).cpu().numpy()
            )

        # Average metrics
        test_loss /= len(data_loader)
        test_acc /= len(data_loader)
        print(f"Test loss: {test_loss:.5f} | Test accuracy: {test_acc:.2f}%\n")
    return test_loss, test_acc
```

Running them Against the Model

```python
import torch
from timeit import default_timer as timer
from tqdm.auto import tqdm

# Assumes train_step/test_step (above), model_1, loss_fn, optimizer, accuracy_fn,
# print_train_time, train_dataloader, test_dataloader and device already exist
torch.manual_seed(42)

# Measure time
train_time_start_on_gpu = timer()

epochs = 3
for epoch in tqdm(range(epochs)):
    print(f"Epoch: {epoch}\n---------")
    train_step(data_loader=train_dataloader,
               model=model_1,
               loss_fn=loss_fn,
               optimizer=optimizer,
               accuracy_fn=accuracy_fn
    )
    test_step(data_loader=test_dataloader,
              model=model_1,
              loss_fn=loss_fn,
              accuracy_fn=accuracy_fn
    )

train_time_end_on_gpu = timer()
total_train_time_model_1 = print_train_time(start=train_time_start_on_gpu,
                                            end=train_time_end_on_gpu,
                                            device=device)
```

Installing Missing Packages in Colab

This is a quick note to show how to install missing packages:

```python
# See if torchmetrics exists, if not, install it
try:
    import torchmetrics, mlxtend
    print(f"mlxtend version: {mlxtend.__version__}")
    assert int(mlxtend.__version__.split(".")[1]) >= 19, "mlxtend version should be 0.19.0 or higher"
except (ImportError, AssertionError):
    !pip install -q torchmetrics -U mlxtend # <- Note: If you're using Google Colab, this may require restarting the runtime
    import torchmetrics, mlxtend
    print(f"mlxtend version: {mlxtend.__version__}")
```

More Functionalizing

So more code just for reference.

Getting Random Data

This time, the code to get random images from the test data:

```python
import random

random.seed(42)
test_samples = []
test_labels = []
for sample, label in random.sample(list(test_data), k=9):
    test_samples.append(sample)
    test_labels.append(label)

# View the first test sample shape and label
print(f"Test sample image shape: {test_samples[0].shape}\nTest sample label: {test_labels[0]} ({class_names[test_labels[0]]})")
```

Making Predictions

Again this will change with different requirements

```python
import torch

device = "cuda" if torch.cuda.is_available() else "cpu"

def make_predictions(model: torch.nn.Module, data: list, device: torch.device = device):
    pred_probs = []
    model.eval()
    with torch.inference_mode():
        for sample in data:
            # Prepare sample
            sample = torch.unsqueeze(sample, dim=0).to(device) # Add an extra dimension and send sample to device

            # Forward pass (model outputs raw logit)
            pred_logit = model(sample)

            # Get prediction probability (logit -> prediction probability)
            pred_prob = torch.softmax(pred_logit.squeeze(), dim=0) # note: perform softmax on the "logits" dimension, not "batch" dimension (in this case we have a batch size of 1, so can perform on dim=0)

            # Get pred_prob off GPU for further calculations
            pred_probs.append(pred_prob.cpu())

    # Stack the pred_probs to turn list into a tensor
    return torch.stack(pred_probs)
```

Running Predictions

So here is how to use it

```python
# Make predictions on test samples with model 2
pred_probs = make_predictions(model=model_2,
                              data=test_samples)

# Turn the prediction probabilities into prediction labels by taking the argmax()
pred_classes = pred_probs.argmax(dim=1)
pred_classes
```

Plotting

Really, the main reason to copy large amounts of code was for this plotting code. I am loving the plotting library and may be taking a shine to Python more these days.

```python
import matplotlib.pyplot as plt

# Plot predictions
plt.figure(figsize=(9, 9))
nrows = 3
ncols = 3
for i, sample in enumerate(test_samples):
    # Create a subplot
    plt.subplot(nrows, ncols, i+1)

    # Plot the target image
    plt.imshow(sample.squeeze(), cmap="gray")

    # Find the prediction label (in text form, e.g. "Sandal")
    pred_label = class_names[pred_classes[i]]

    # Get the truth label (in text form, e.g. "T-shirt")
    truth_label = class_names[test_labels[i]]

    # Create the title text of the plot
    title_text = f"Pred: {pred_label} | Truth: {truth_label}"

    # Check for equality and change title colour accordingly
    if pred_label == truth_label:
        plt.title(title_text, fontsize=10, c="g") # green text if correct
    else:
        plt.title(title_text, fontsize=10, c="r") # red text if wrong
    plt.axis(False);
```

Confusion Matrix

So for once this was not new to me. I built these when looking at breast density (a long, long time ago, before my stroke). The plot above is great to see, but a confusion matrix with color makes this rock.

Making one is really easy using torchmetrics (with mlxtend for the plot):
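The confusion matrix code expects `y_pred_tensor`, the predicted label for every test sample, which these notes do not show being made. A minimal sketch of one way to build it, assuming a multi-class model that outputs logits and a test DataLoader created with `shuffle=False` (so predictions line up with `test_data.targets`). `predict_labels` is my own hypothetical helper, and the toy model and data only demonstrate the call:

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

def predict_labels(model: nn.Module, dataloader: DataLoader, device: str = "cpu") -> torch.Tensor:
    """Return the predicted class label for every sample in dataloader, in order."""
    preds = []
    model.to(device)
    model.eval()
    with torch.inference_mode():
        for X, _ in dataloader:
            logits = model(X.to(device))
            # argmax of the logits gives the same label as argmax of softmax(logits)
            preds.append(logits.argmax(dim=1).cpu())
    return torch.cat(preds)

# Toy usage (made-up model and data): 10 samples with 4 features, 3 classes
toy_model = nn.Linear(in_features=4, out_features=3)
toy_loader = DataLoader(TensorDataset(torch.rand(10, 4), torch.zeros(10)), batch_size=4, shuffle=False)
toy_preds = predict_labels(toy_model, toy_loader)
print(toy_preds.shape)  # torch.Size([10])

# In the course setting this would be something like:
# y_pred_tensor = predict_labels(model_2, test_dataloader, device)
```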

```python
from torchmetrics import ConfusionMatrix
from mlxtend.plotting import plot_confusion_matrix

# 1. y_pred_tensor: the predicted label for every test sample (not shown in these notes)

# 2. Setup confusion matrix instance and compare predictions to targets
confmat = ConfusionMatrix(num_classes=len(class_names), task='multiclass')
confmat_tensor = confmat(preds=y_pred_tensor,
                         target=test_data.targets)

# 3. Plot the confusion matrix
fig, ax = plot_confusion_matrix(
    conf_mat=confmat_tensor.numpy(), # matplotlib likes working with NumPy
    class_names=class_names, # turn the row and column labels into class names
    figsize=(10, 7)
);
```

This produces a color-coded confusion matrix, which makes it easy to see where the problems are, i.e. which classes the model confuses with each other.

Custom Datasets

What Follows

Quite rightly, the steps below focus on the data at each step, checking the code does what it says on the tin. A fair bit of time is devoted to doing something, then checking it did it.

Dataset Standard

There are standard conventions for storing image classification datasets: you separate the train and test data, and store each image in a directory named after the class it belongs to. For example:

```
There are 2 directories and 0 images in 'data/pizza_steak_sushi'.
There are 3 directories and 0 images in 'data/pizza_steak_sushi/train'.
There are 0 directories and 75 images in 'data/pizza_steak_sushi/train/steak'.
There are 0 directories and 78 images in 'data/pizza_steak_sushi/train/pizza'.
There are 0 directories and 72 images in 'data/pizza_steak_sushi/train/sushi'.
There are 3 directories and 0 images in 'data/pizza_steak_sushi/test'.
There are 0 directories and 19 images in 'data/pizza_steak_sushi/test/steak'.
There are 0 directories and 25 images in 'data/pizza_steak_sushi/test/pizza'.
There are 0 directories and 31 images in 'data/pizza_steak_sushi/test/sushi'.
```
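A listing like the one above can be produced with `os.walk`. A sketch; `walk_through_dir` is my own name for the helper, and the demo builds a throwaway folder with the same train/test/class layout so it runs without the course data:

```python
import os
import tempfile

def walk_through_dir(dir_path):
    """Print (and return) how many sub-directories and files each directory contains."""
    lines = []
    for dirpath, dirnames, filenames in os.walk(dir_path):
        line = f"There are {len(dirnames)} directories and {len(filenames)} images in '{dirpath}'."
        print(line)
        lines.append(line)
    return lines

# On the course data this would be: walk_through_dir("data/pizza_steak_sushi")

# Self-contained demo on a temporary folder with the standard layout
with tempfile.TemporaryDirectory() as root:
    for split in ["train", "test"]:
        for cls in ["pizza", "steak"]:
            os.makedirs(os.path.join(root, split, cls))
            open(os.path.join(root, split, cls, "img_0.jpg"), "w").close()  # empty placeholder file
    demo_lines = walk_through_dir(root)
```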

Dataset Transform

I did not imagine it was necessary to flip the images, but this transform flips them horizontally 50% of the time. They are also resized, which is necessary because the model expects every image to have the same shape.

```python
from torchvision import transforms

# Write transform for image
data_transform = transforms.Compose([
    # Resize the images to 64x64
    transforms.Resize(size=(64, 64)),
    # Flip the images randomly on the horizontal
    transforms.RandomHorizontalFlip(p=0.5), # p = probability of flip, 0.5 = 50% chance
    # Turn the image into a torch.Tensor
    transforms.ToTensor() # this also converts all pixel values from 0 to 255 to be between 0.0 and 1.0
])
```

I really liked permute(), which lets you reorder a tensor's dimensions, e.g. moving the color channel from first to last. Someone thought about the usage when they wrote that.

```python
import random

import matplotlib.pyplot as plt
from PIL import Image

def plot_transformed_images(image_paths, transform, n=3, seed=42):
    # image_paths: a list of pathlib.Path objects, e.g. list(Path("data/pizza_steak_sushi").glob("*/*/*.jpg"))
    random.seed(seed)
    random_image_paths = random.sample(image_paths, k=n)
    for image_path in random_image_paths:
        with Image.open(image_path) as f:
            fig, ax = plt.subplots(1, 2)
            ax[0].imshow(f)
            ax[0].set_title(f"Original \nSize: {f.size}")
            ax[0].axis("off")

            # Transform and plot image
            # Note: permute() will change shape of image to suit matplotlib
            # (PyTorch default is [C, H, W] but Matplotlib is [H, W, C])
            transformed_image = transform(f).permute(1, 2, 0)
            ax[1].imshow(transformed_image)
            ax[1].set_title(f"Transformed \nSize: {transformed_image.shape}")
            ax[1].axis("off")

            fig.suptitle(f"Class: {image_path.parent.stem}", fontsize=16)
```

Using ImageFolder

ImageFolder loads your images from the standard directory layout and can transform both the images and the labels. There are lots of ready-made dataset classes to use, but here is the example from the course:

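```python
from pathlib import Path

from torchvision import datasets

# Directories follow the standard layout shown above
train_dir = Path("data/pizza_steak_sushi/train")
test_dir = Path("data/pizza_steak_sushi/test")

# Use ImageFolder to create dataset(s)
train_data = datasets.ImageFolder(root=train_dir, # target folder of images
                                  transform=data_transform, # transforms to perform on data (images)
                                  target_transform=None) # transforms to perform on labels (if necessary)

test_data = datasets.ImageFolder(root=test_dir,
                                 transform=data_transform)
```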

Writing Your Own ImageFolder

PyTorch provides a base class called Dataset. You need to override `__getitem__()` (and it is recommended to override `__len__()` as well). It is a lot of code, but perhaps handy for any future use outside of the course.

```python
import os
import pathlib
from typing import Dict, List, Tuple

import torch
from PIL import Image
from torch.utils.data import Dataset
from torchvision import transforms

# Write a custom dataset class (inherits from torch.utils.data.Dataset)
# 1. Subclass torch.utils.data.Dataset
class ImageFolderCustom(Dataset):

    # 2. Initialize with a targ_dir and transform (optional) parameter
    def __init__(self, targ_dir: str, transform=None) -> None:
        # 3. Create class attributes
        # Get all image paths
        self.paths = list(pathlib.Path(targ_dir).glob("*/*.jpg")) # note: you'd have to update this if you've got .png's or .jpeg's
        # Setup transforms
        self.transform = transform
        # Create classes and class_to_idx attributes
        self.classes, self.class_to_idx = self._find_classes(targ_dir)

    # 4. Make function to load images
    def load_image(self, index: int) -> Image.Image:
        "Opens an image via a path and returns it."
        image_path = self.paths[index]
        return Image.open(image_path)

    # 5. Override the __len__() method (optional but recommended for subclasses of torch.utils.data.Dataset)
    def __len__(self) -> int:
        "Returns the total number of samples."
        return len(self.paths)

    # 6. Override the __getitem__() method (required for subclasses of torch.utils.data.Dataset)
    def __getitem__(self, index: int) -> Tuple[torch.Tensor, int]:
        "Returns one sample of data, data and label (X, y)."
        img = self.load_image(index)
        class_name = self.paths[index].parent.name # expects path in data_folder/class_name/image.jpeg
        class_idx = self.class_to_idx[class_name]

        # Transform if necessary
        if self.transform:
            return self.transform(img), class_idx # return data, label (X, y)
        else:
            return img, class_idx # return data, label (X, y)

    # Make function to find classes in target directory
    def _find_classes(self, directory: str) -> Tuple[List[str], Dict[str, int]]:
        """Finds the class folder names in a target directory.

        Assumes target directory is in standard image classification format.

        Args:
            directory (str): target directory to load classnames from.

        Returns:
            Tuple[List[str], Dict[str, int]]: (list_of_class_names, dict(class_name: idx...))

        Example:
            find_classes("food_images/train")
            >>> (["class_1", "class_2"], {"class_1": 0, ...})
        """
        # 1. Get the class names by scanning the target directory
        classes = sorted(entry.name for entry in os.scandir(directory) if entry.is_dir())

        # 2. Raise an error if class names not found
        if not classes:
            raise FileNotFoundError(f"Couldn't find any classes in {directory}.")

        # 3. Create a dictionary of index labels (computers prefer numerical rather than string labels)
        class_to_idx = {cls_name: i for i, cls_name in enumerate(classes)}
        return classes, class_to_idx
```

Data Augmentation

We can manipulate the training data by rotating, zooming, flipping etc. This lets the model see the same data from different perspectives, which artificially increases the diversity of the training set and can improve results considerably.

```python
from torchvision import transforms

train_transforms = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.TrivialAugmentWide(num_magnitude_bins=31), # how intense
    transforms.ToTensor() # use ToTensor() last to get everything between 0 & 1
])
```

torchinfo

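A useful tool for a mere beginner like me: torchinfo prints a summary of a model's layers, output shapes and parameter counts. Here it was showing a TinyVGG model.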

Plotting the Loss

I would imagine that, aside from loading data accurately, knowing what good looks like is pretty, pretty key. So here is how Daniel plotted the loss. First, the training output the results come from:

```
Epoch: 1 | train_loss: 1.1078 | train_acc: 0.2578 | test_loss: 1.1360 | test_acc: 0.2604
Epoch: 2 | train_loss: 1.0847 | train_acc: 0.4258 | test_loss: 1.1620 | test_acc: 0.1979
Epoch: 3 | train_loss: 1.1157 | train_acc: 0.2930 | test_loss: 1.1697 | test_acc: 0.1979
Epoch: 4 | train_loss: 1.0956 | train_acc: 0.4141 | test_loss: 1.1384 | test_acc: 0.1979
Epoch: 5 | train_loss: 1.0985 | train_acc: 0.2930 | test_loss: 1.1426 | test_acc: 0.1979
```

Now the code to plot it.

```python
from typing import Dict, List

import matplotlib.pyplot as plt

def plot_loss_curves(results: Dict[str, List[float]]):
    """Plots training curves of a results dictionary.

    Args:
        results (dict): dictionary containing list of values, e.g.
            {"train_loss": [...],
             "train_acc": [...],
             "test_loss": [...],
             "test_acc": [...]}
    """
    # Get the loss values of the results dictionary (training and test)
    loss = results['train_loss']
    test_loss = results['test_loss']

    # Get the accuracy values of the results dictionary (training and test)
    accuracy = results['train_acc']
    test_accuracy = results['test_acc']

    # Figure out how many epochs there were
    epochs = range(len(results['train_loss']))

    # Setup a plot
    plt.figure(figsize=(15, 7))

    # Plot loss
    plt.subplot(1, 2, 1)
    plt.plot(epochs, loss, label='train_loss')
    plt.plot(epochs, test_loss, label='test_loss')
    plt.title('Loss')
    plt.xlabel('Epochs')
    plt.legend()

    # Plot accuracy
    plt.subplot(1, 2, 2)
    plt.plot(epochs, accuracy, label='train_accuracy')
    plt.plot(epochs, test_accuracy, label='test_accuracy')
    plt.title('Accuracy')
    plt.xlabel('Epochs')
    plt.legend();
```

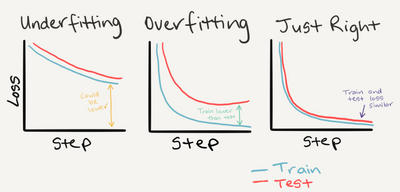

Understanding Loss Curves in Machine Learning


Loss curves help visualize how well a model is learning over time. They typically show two lines:

- Training loss – how well the model fits the data it was trained on.

- Test loss – how well the model performs on unseen data.

Below are three common patterns:

Underfitting

- Description: Both training and test loss stay high and close together.

- What it means: The model isn’t learning enough from the data. It’s too simple or not trained long enough.

- Visual cue: Flat or slowly decreasing lines with little separation.

- Fixes: Try a more complex model, train longer, or improve feature quality.

Overfitting

- Description: Training loss drops sharply, but test loss stays high or increases.

- What it means: The model is memorizing the training data but failing to generalize.

- Visual cue: Wide gap between training and test loss.

- Fixes: Use regularization, simplify the model, or add more training data.

Just Right (Well-Fitted)

- Description: Both training and test loss decrease and stay close together.

- What it means: The model is learning effectively and generalizing well.

- Visual cue: Parallel curves with low loss and minimal gap.

- Goal: This is the ideal balance between bias and variance.

Fixing Underfitting in PyTorch

Underfitting occurs when a model fails to learn the underlying patterns in the data. It typically results in high training and test loss.

| Cause | Symptom | Remedy |
|---|---|---|
| Model too simple | High training and test loss | Use a deeper or wider neural network |
| Insufficient training | Loss plateaus early | Train for more epochs or use better learning rate scheduling |
| Poor feature representation | Low accuracy across datasets | Improve input features or use pretrained embeddings |
| Over-regularization | Loss remains high despite training | Reduce dropout, weight decay, or other regularization |
| Low learning rate | Slow convergence | Increase learning rate or use adaptive optimizers like Adam |

Fixing Overfitting in PyTorch

Overfitting happens when a model learns the training data too well but fails to generalize to new data. It shows low training loss and high test loss.

| Cause | Symptom | Remedy |
|---|---|---|
| Model too complex | Training loss much lower than test loss | Simplify the architecture or reduce parameters |
| Too few training samples | Poor generalization | Augment data or gather more samples |
| No regularization | Sharp drop in training loss | Add dropout, weight decay, or early stopping |
| High learning rate | Erratic loss behavior | Lower learning rate or use learning rate decay |
| Overtraining | Test loss increases after a point | Use early stopping or monitor validation metrics |
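Early stopping shows up twice in the table above. A minimal hand-rolled sketch of the idea: stop once the test (or validation) loss has not improved for `patience` epochs. `EarlyStopping` is my own hypothetical helper, not a PyTorch class, and the loss values are made up; in a real loop you would typically also save `model.state_dict()` whenever a new best loss appears:

```python
class EarlyStopping:
    """Signal a stop when the monitored loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta    # how much lower counts as an improvement
        self.best_loss = float("inf")
        self.counter = 0              # epochs since the last improvement

    def step(self, loss: float) -> bool:
        if loss < self.best_loss - self.min_delta:
            self.best_loss = loss
            self.counter = 0
        else:
            self.counter += 1
        return self.counter >= self.patience

# Made-up test losses: improving, then getting worse (overfitting)
test_losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.6]
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, loss in enumerate(test_losses):
    if stopper.step(loss):
        stopped_at = epoch
        print(f"Stopping early at epoch {epoch} (best loss {stopper.best_loss})")
        break
```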

Summary

- Underfitting = model not learning enough → make it smarter or train longer.

- Overfitting = model learning too much from training → make it generalize better.

Using Your Own Custom Image

The problems you might hit when using a custom image are:

- Wrong datatypes - our model expects `torch.float32` where our original custom image was `uint8`.

- Wrong device - our model was on the target `device` (in our case, the GPU) whereas our target data hadn't been moved to the target `device` yet.

- Wrong shapes - our model expected an input image of shape `[N, C, H, W]` or `[batch_size, color_channels, height, width]` whereas our custom image tensor was of shape `[color_channels, height, width]`.
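All three fixes in one place. A sketch using a random `uint8` tensor as a stand-in for an image loaded with `torchvision.io.read_image` (which returns `uint8` in `[C, H, W]` layout):

```python
import torch

device = "cuda" if torch.cuda.is_available() else "cpu"

# Stand-in for a loaded custom image: uint8 values 0-255, shape [C, H, W]
custom_image = torch.randint(0, 256, (3, 64, 64), dtype=torch.uint8)

image = custom_image.type(torch.float32) / 255.0  # 1. datatype: float32, scaled to [0, 1]
image = image.unsqueeze(dim=0)                    # 2. shape: add batch dimension -> [1, C, H, W]
image = image.to(device)                          # 3. device: same device as the model

print(image.dtype, image.shape, image.device)
```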

Here is the all-in-one function to predict on an image. It should make a good base for future PyTorch work.

```python
from typing import List

import matplotlib.pyplot as plt
import torch
import torchvision

device = "cuda" if torch.cuda.is_available() else "cpu"

def pred_and_plot_image(model: torch.nn.Module,
                        image_path: str,
                        class_names: List[str] = None,
                        transform=None,
                        device: torch.device = device):
    """Makes a prediction on a target image and plots the image with its prediction."""
    # 1. Load in image and convert the tensor values to float32
    target_image = torchvision.io.read_image(str(image_path)).type(torch.float32)

    # 2. Divide the image pixel values by 255 to get them between [0, 1]
    target_image = target_image / 255.

    # 3. Transform if necessary
    if transform:
        target_image = transform(target_image)

    # 4. Make sure the model is on the target device
    model.to(device)

    # 5. Turn on model evaluation mode and inference mode
    model.eval()
    with torch.inference_mode():
        # Add an extra dimension to the image
        target_image = target_image.unsqueeze(dim=0)

        # Make a prediction on image with an extra dimension and send it to the target device
        target_image_pred = model(target_image.to(device))

    # 6. Convert logits -> prediction probabilities (using torch.softmax() for multi-class classification)
    target_image_pred_probs = torch.softmax(target_image_pred, dim=1)

    # 7. Convert prediction probabilities -> prediction labels
    target_image_pred_label = torch.argmax(target_image_pred_probs, dim=1)

    # 8. Plot the image alongside the prediction and prediction probability
    plt.imshow(target_image.squeeze().permute(1, 2, 0)) # make sure it's the right size for matplotlib
    if class_names:
        title = f"Pred: {class_names[target_image_pred_label.cpu()]} | Prob: {target_image_pred_probs.max().cpu():.3f}"
    else:
        title = f"Pred: {target_image_pred_label} | Prob: {target_image_pred_probs.max().cpu():.3f}"
    plt.title(title)
    plt.axis(False);
```

Hidden Markov Model

This never came up in the PyTorch course but did in the TensorFlow one, so I wanted to provide a PyTorch HMM equivalent. It samples a sequence of hidden states and an observation for each one.

```python
import torch
import torch.distributions as dist

# Initial state distribution
initial_probs = torch.tensor([0.2, 0.8])
initial_dist = dist.Categorical(probs=initial_probs)

# Transition matrix (row = current state, column = next state)
transition_probs = torch.tensor([[0.5, 0.5],
                                 [0.2, 0.8]])

# Emission distributions (one Normal per hidden state)
observation_dists = [
    dist.Normal(loc=0.0, scale=5.0),
    dist.Normal(loc=15.0, scale=10.0)
]

# Prediction loop
num_steps = 7
states = []
predictions = []

# Sample initial state and emission
state = initial_dist.sample()
states.append(state.item())
predictions.append(observation_dists[state].sample().item())

# Sample transitions and emissions
for _ in range(1, num_steps):
    state = dist.Categorical(probs=transition_probs[state]).sample()
    states.append(state.item())
    predictions.append(observation_dists[state].sample().item())

# Output (random samples: they change on every run unless torch.manual_seed is set first)
print("Hidden States:", states)
print("Predicted Observations:", predictions)
```
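The loop above draws random samples, so every run differs. If you want deterministic expected values instead, one approach is to push the state distribution forward through the transition matrix ($p_{t+1} = p_t T$) and weight each state's emission mean by its probability. A sketch reusing the same numbers as above:

```python
import torch

initial_probs = torch.tensor([0.2, 0.8])
transition_probs = torch.tensor([[0.5, 0.5],
                                 [0.2, 0.8]])
emission_means = torch.tensor([0.0, 15.0])  # the loc of each Normal above

num_steps = 7
state_probs = initial_probs
expected = []
for _ in range(num_steps):
    # Expected observation = sum over states of P(state) * mean of that state's emission
    expected.append((state_probs * emission_means).sum().item())
    # Probability of each state at the next step
    state_probs = state_probs @ transition_probs

print(expected)  # starts at 12.0, then 11.1, drifting towards ~10.71
```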