LLM and Ollama

Revision as of 02:43, 6 May 2025

Introduction

This is a page about Ollama and, you guessed it, LLMs. I have downloaded several models and got a UI running over them locally. The plan is to build something like Claude Desktop in TypeScript or Golang. First, some theory in Python from here. Here is the problem I am trying to solve.

Using the Remote Ollama

You can connect to a remote Ollama server by setting the host:

```bash
export OLLAMA_HOST=192.blah.blah.blah
```

Now you can use it with:

```bash
ollama run llama3.2:latest
```
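Scripts can read the same variable. The following is a minimal sketch that assumes a simplified interpretation of OLLAMA_HOST: prepend http:// when there is no scheme, and use Ollama's default port 11434 when there is no port. Ollama's own parsing covers more cases. `resolve_ollama_url` and `list_models` are hypothetical helper names. `list_models` calls the `GET /api/tags` endpoint, which lists the models installed on the server.

```python
import json
import os
import urllib.request
from typing import List, Optional
from urllib.parse import urlsplit

DEFAULT_PORT = 11434  # Ollama's default listening port


def resolve_ollama_url(value: Optional[str] = None) -> str:
    """Turn an OLLAMA_HOST-style value into a base URL (simplified rules)."""
    if value is None:
        value = os.environ.get("OLLAMA_HOST", "")
    value = value.strip() or "127.0.0.1"  # fall back to the local server
    if "://" not in value:
        value = "http://" + value  # assume plain HTTP when no scheme is given
    parts = urlsplit(value)
    port = parts.port or DEFAULT_PORT  # simplification: always default to 11434
    return f"{parts.scheme}://{parts.hostname}:{port}"


def list_models(base_url: str) -> List[str]:
    """Return the names of the models installed on the server (GET /api/tags)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]
```

With OLLAMA_HOST exported, `print(list_models(resolve_ollama_url()))` should show the same models the remote `ollama` CLI sees.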

Taking llama3.2 (3.2B) as an example:

Model Info

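- Architecture: llama - the model architecture/family (Meta's Llama)

- Parameters: 3.2B - 3.2 billion parameters (bigger models require more resources)

- Context Length: 131072 - maximum number of tokens it can ingest at once (its context window)

- Embedding Length: 3072 - size of the vector for each token in the input text

- Quantization: Q4_K_M - weights compressed to roughly 4 bits each using llama.cpp's "K-quant" scheme (medium variant), trading a little accuracy for much lower memory use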
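The parameter count and quantization together give a rough idea of how much memory the weights alone need. The following is a back-of-the-envelope sketch. It assumes Q4_K_M averages about 4.85 bits per weight, which is an approximation because the format keeps some tensors at higher precision. It also ignores the KV cache, which grows with the context length actually used.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 3.2e9  # from the model info above

# 16 bits per weight is the unquantized FP16 baseline; 4.85 is an assumed
# average for Q4_K_M, which mixes 4-bit and some higher-precision blocks.
for label, bits in [("FP16", 16.0), ("Q4_K_M (approx.)", 4.85)]:
    print(f"{label}: {weight_memory_gb(N_PARAMS, bits):.2f} GB")
```

This prints 6.40 GB for FP16 versus 1.94 GB for Q4_K_M, a bit over a 3x reduction.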

You can customize the model with a Modelfile and then build it with ollama create. For example:

```
FROM llama3.2

# set the temperature, where higher is more creative
PARAMETER temperature 0.3

SYSTEM """
You are Bill, a very smart assistant who answers questions succinctly and informatively.
"""
```

Now we can create a copy with:

```bash
ollama create bill -f ./Modelfile
```

REST API Interaction

So we can send questions to Llama using the REST endpoint on port 11434:

```bash
curl http://192.blah.blah.blah:11434/api/generate -d '{
  "model": "llama3.2",
  "prompt": "Why is the sky blue?",
  "stream": false
}'
```
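Here is the same request from Python using only the standard library. This is a minimal sketch: `build_generate_payload` and `generate` are hypothetical helper names, and `BASE_URL` assumes a local server. The optional `system` and `options` fields of `/api/generate` set a system prompt and temperature for a single request. This is similar to what the Modelfile above bakes into the "bill" model.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "http://localhost:11434"  # assumption: replace with your Ollama host


def build_generate_payload(prompt: str, model: str = "llama3.2",
                           system: Optional[str] = None,
                           temperature: Optional[float] = None) -> dict:
    """Build the JSON body for POST /api/generate (non-streaming)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if system is not None:
        payload["system"] = system  # overrides the model's SYSTEM prompt
    if temperature is not None:
        payload["options"] = {"temperature": temperature}
    return payload


def generate(payload: dict, base_url: str = BASE_URL) -> str:
    """POST the payload and return the generated text."""
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),  # data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]
```

For example, `generate(build_generate_payload("Why is the sky blue?", system="You are Bill...", temperature=0.3))` behaves roughly like asking the custom model.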

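To chat, we change the endpoint to /api/chat, which takes a list of messages instead of a prompt. Adding "format": "json" to the payload asks for JSON output. The Ollama docs recommend also asking for JSON in the message itself:

```bash
curl http://192.blah.blah.blah:11434/api/chat -d '{
  "model": "llama3.2",
  "messages": [
    { "role": "user", "content": "Why is the sky blue? Respond using JSON." }
  ],
  "stream": false,
  "format": "json"
}'
```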

All of the options are documented here.
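The chat endpoint does not remember earlier turns. The client sends the whole conversation in `messages` on every request and appends each reply. The following is a minimal standard-library sketch. `BASE_URL`, `chat` and `ask` are illustrative names, and the server address is an assumption.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434"  # assumption: point this at your Ollama host


def chat(messages: list, model: str = "llama3.2", base_url: str = BASE_URL) -> dict:
    """Send the full message history to POST /api/chat and return the reply message."""
    body = {"model": model, "messages": messages, "stream": False}
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["message"]  # {"role": "assistant", "content": ...}


def ask(history: list, question: str, send=chat) -> str:
    """Append the question, get a reply via `send`, store the reply and return its text."""
    history.append({"role": "user", "content": question})
    reply = send(history)
    history.append(reply)
    return reply["content"]
```

Keeping one `history` list and calling `ask(history, ...)` repeatedly gives the model the context of the previous turns. The `send` parameter makes it easy to swap in a fake for testing.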

UI-Based Client: Msty

Msty seems to be a good choice. You specify a provider and can then put in 192.blah.blah.blah:11434. It supports DeepSeek and other providers too.

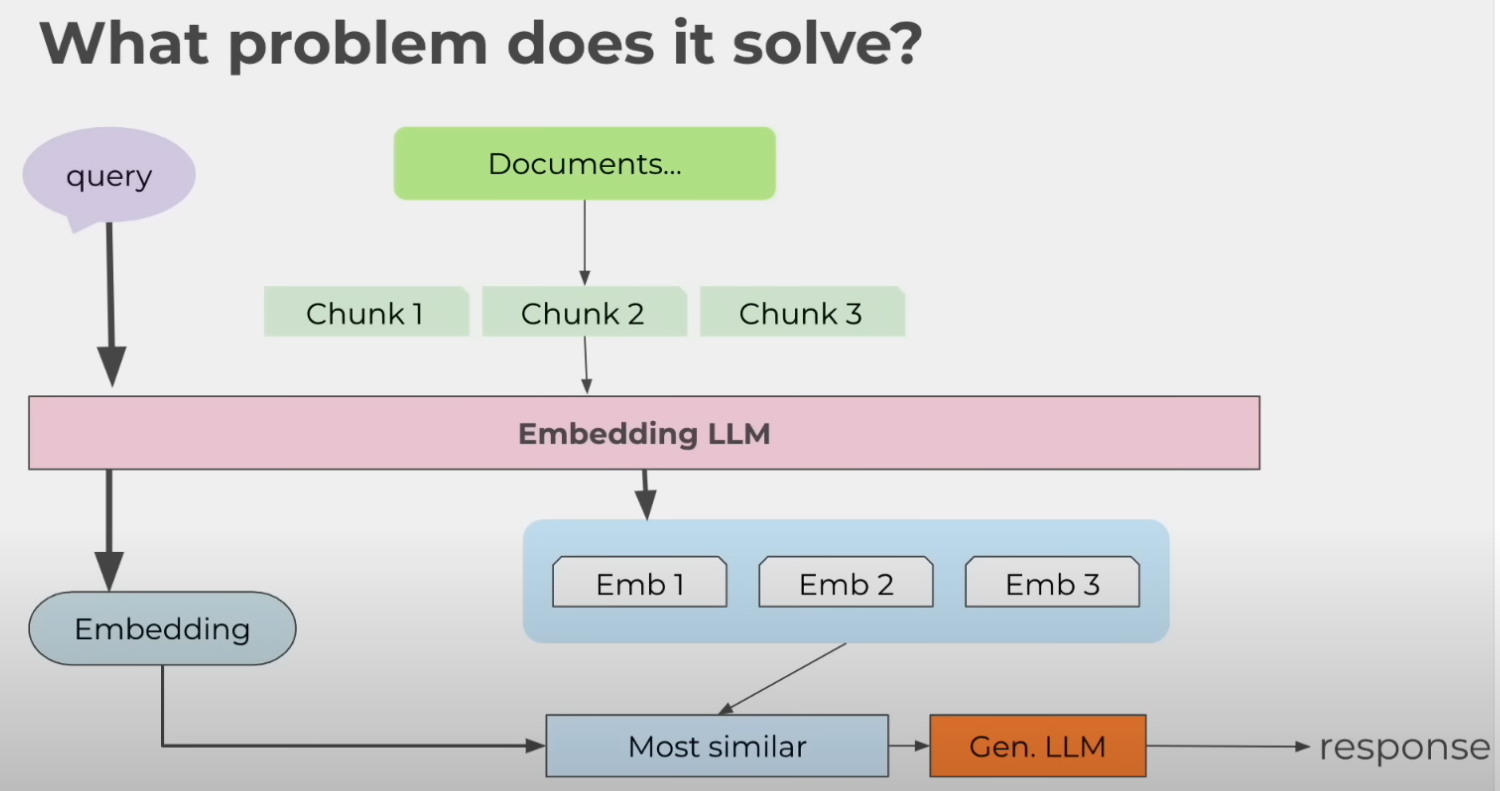

RAG (Retrieval-Augmented Generation)

This allows us to converse with our own documents/data. It also reduces the bizarre, made-up statements LLMs sometimes produce by grounding answers in retrieved text. A RAG system is made up of:

- LLM

- Document Corpus (Knowledge Base)

- Document Embeddings

- Vector Store (a vector DB such as Faiss, Pinecone, ChromaDB)

- Retrieval Mechanism
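To make the retrieval mechanism concrete, here is a toy sketch of the embed, store and search loop. For illustration only, it substitutes word-count vectors for a real embedding model; real systems use a model such as nomic-embed-text. It ranks stored texts by cosine similarity, which is what a vector store does at scale.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Keeps (text, vector) pairs in memory and returns the closest texts."""

    def __init__(self):
        self.items = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore()
for doc in ["Ollama serves models on port 11434.",
            "ChromaDB stores embeddings in collections.",
            "Angular 19 adds new features."]:
    store.add(doc)

# The retrieved chunks would then be pasted into the LLM prompt as context.
print(store.search("Which port does Ollama use?", k=1))
# → ['Ollama serves models on port 11434.']
```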

LangChain is a framework that makes this easier. It helps with:

- Loading and parsing documents

- Splitting documents

- Generating embeddings

- Providing a unified abstraction for working with LLMs and apps
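Splitting is worth understanding because the examples on this page set `chunk_size` and `chunk_overlap`. The following is a simplified sketch of fixed-size character chunking with overlap. LangChain's splitters also try to break on separators such as blank lines and newlines first, so their chunk boundaries will differ. `chunk_text` is a hypothetical helper.

```python
from typing import List


def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into windows of chunk_size characters; consecutive windows share chunk_overlap characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=2))
# → ['abcd', 'cdef', 'efgh', 'ghij']
```

With chunk_size=1200 and chunk_overlap=300, each new chunk in this simplified scheme starts 900 characters after the previous one. The overlap keeps a sentence that straddles a boundary visible in both chunks.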

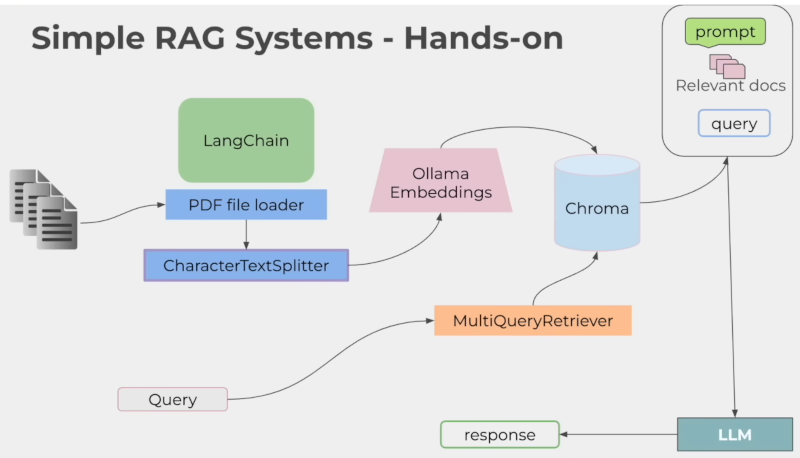

This is referred to as a simple RAG system. We shall see.

Example 1

This was a bit of a strange experience. The code is here, but really it just consists of the boxes in the diagram.

```python
##
# 1. Ingest PDF Files
# 2. Extract Text from PDF Files and split into small chunks
# 3. Send the chunks to the embedding model
# 4. Save the embeddings to a vector database
# 5. Perform similarity search on the vector database to find similar documents
# 6. retrieve the similar documents and present them to the user
## run pip install -r requirements.txt to install the required packages

from langchain_community.document_loaders import UnstructuredPDFLoader
from langchain_community.document_loaders import OnlinePDFLoader

doc_path = "./data/BOI.pdf"
model = "llama3.2"

# Local PDF file uploads
if doc_path:
    loader = UnstructuredPDFLoader(file_path=doc_path)
    data = loader.load()
    print("done loading....")
else:
    # Stop here; otherwise `data` below would be undefined
    raise SystemExit("Upload a PDF file")

# Preview first page
content = data[0].page_content
# print(content[:100])

# ==== End of PDF Ingestion ====

# ==== Extract Text from PDF Files and Split into Small Chunks ====
from langchain_ollama import OllamaEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_community.vectorstores import Chroma

# Split and chunk
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1200, chunk_overlap=300)
chunks = text_splitter.split_documents(data)
print("done splitting....")
# print(f"Number of chunks: {len(chunks)}")
# print(f"Example chunk: {chunks[0]}")

# ===== Add to vector database ===
import ollama

ollama.pull("nomic-embed-text")  # make sure the embedding model is available

vector_db = Chroma.from_documents(
    documents=chunks,
    embedding=OllamaEmbeddings(model="nomic-embed-text"),
    collection_name="simple-rag",
)
print("done adding to vector database....")

## === Retrieval ===
from langchain.prompts import ChatPromptTemplate, PromptTemplate
from langchain_core.output_parsers import StrOutputParser
from langchain_ollama import ChatOllama
from langchain_core.runnables import RunnablePassthrough
from langchain.retrievers.multi_query import MultiQueryRetriever

# set up our model to use
llm = ChatOllama(model=model)

# a simple technique to generate multiple questions from a single question and then retrieve documents
# based on those questions, getting the best of both worlds.
QUERY_PROMPT = PromptTemplate(
    input_variables=["question"],
    template="""You are an AI language model assistant. Your task is to generate five
different versions of the given user question to retrieve relevant documents from
a vector database. By generating multiple perspectives on the user question, your
goal is to help the user overcome some of the limitations of the distance-based
similarity search. Provide these alternative questions separated by newlines.
Original question: {question}""",
)

retriever = MultiQueryRetriever.from_llm(
    vector_db.as_retriever(), llm, prompt=QUERY_PROMPT
)

# RAG prompt
template = """Answer the question based ONLY on the following context:
{context}
Question: {question}
"""
prompt = ChatPromptTemplate.from_template(template)

chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

# Pass the question as a plain string: it feeds both the retriever and the prompt
# res = chain.invoke("what is the document about?")
# res = chain.invoke("what are the main points as a business owner I should be aware of?")
res = chain.invoke("how to report BOI?")
print(res)
```
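The MultiQueryRetriever asks the LLM for alternative questions, one per line. It runs each question against the vector store and combines the hits, dropping duplicates. The following plain-Python sketch shows that parse-and-merge step. `parse_questions` and `multi_query_retrieve` are hypothetical names, and a dictionary stands in for the vector store.

```python
from typing import Callable, List


def parse_questions(llm_output: str) -> List[str]:
    """Split the LLM's newline-separated alternative questions, dropping blank lines."""
    return [line.strip() for line in llm_output.splitlines() if line.strip()]


def multi_query_retrieve(questions: List[str],
                         search: Callable[[str], List[str]]) -> List[str]:
    """Run every question through `search` and return the unique union of hits in first-seen order."""
    seen = set()
    merged = []
    for question in questions:
        for doc in search(question):
            if doc not in seen:
                seen.add(doc)
                merged.append(doc)
    return merged


# Hypothetical search results standing in for the vector store
index = {
    "How do I file a BOI report?": ["chunk A", "chunk B"],
    "Where is BOI reported?": ["chunk B", "chunk C"],
}
questions = parse_questions("How do I file a BOI report?\n\nWhere is BOI reported?\n")
print(multi_query_retrieve(questions, lambda q: index.get(q, [])))
# → ['chunk A', 'chunk B', 'chunk C']
```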

I did like the Streamlit UI version.

Example 2

I spent a bit more time unpicking this and now have a better understanding. This time around it consists of two parts:

- Get the Data

- Build a query tool

Most of the time was spent trying to find a working version of ChromaDB, which turned out to be 0.6.1.

Get the Data

We get the data by:

- Building a list of URLs

- Building documents from the URLs

- Splitting the data into chunks

- Embedding each chunk with nomic-embed-text via Ollama

- Persisting the collected data in ChromaDB

```python
"""Load web pages, split them into chunks, embed them with Ollama and store them in ChromaDB."""
import time
import uuid

import chromadb
from langchain_community.document_loaders import WebBaseLoader
from langchain_text_splitters import CharacterTextSplitter
import ollama


def load_data() -> list:
    """
    Load data from the specified URLs using WebBaseLoader.
    """
    urls = ["https://angular.love/angular-19-whats-new"]
    loader = WebBaseLoader(urls)
    loaded_documents = loader.load()
    return loaded_documents


text_splitter = CharacterTextSplitter(
    chunk_size=3400,
    chunk_overlap=300,
    is_separator_regex=False,
)

COLLECTION_NAME = "buildragwithpython"

documents = load_data()

# Connect to a ChromaDB server on localhost:8000 (e.g. one started with `chroma run`)
client = chromadb.HttpClient(host="localhost", port=8000)

# Get the list of collection names (chromadb 0.6.x returns names, not Collection objects)
collection_names = client.list_collections()

# If the collection already exists, delete it
if COLLECTION_NAME in collection_names:
    print("deleting collection")
    client.delete_collection(COLLECTION_NAME)

collection = client.get_or_create_collection(
    name=COLLECTION_NAME, metadata={"hnsw:space": "cosine"}
)

starttime = time.time()

# Iterate through the documents
for doc in documents:
    content = doc.page_content
    texts = text_splitter.create_documents([content])

    # Adding source metadata to each chunk with unique IDs
    for i, text in enumerate(texts):
        text.metadata["source"] = doc.metadata.get("source", "default_source")
        DOC_ID = str(uuid.uuid4())

        # Generate embedding using Ollama directly
        response = ollama.embeddings(model="nomic-embed-text", prompt=text.page_content)
        embedding = response["embedding"]

        collection.add(
            documents=[text.page_content],
            metadatas=[{"source": text.metadata["source"], "chunk_id": i}],
            ids=[DOC_ID],
            embeddings=[embedding],
        )

print(f"--- {time.time() - starttime:.6f} seconds ---")
```
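The script gives every chunk a random uuid4 ID, which is why it deletes and rebuilds the collection on each run. An alternative, which is a swapped-in technique rather than what the original does, derives the ID from the source URL and chunk text with hashlib. Re-ingesting the same chunk then yields the same ID, so it could be written with the collection's upsert instead of being duplicated. `chunk_id` is a hypothetical helper.

```python
import hashlib


def chunk_id(source: str, text: str) -> str:
    """Deterministic chunk ID: SHA-256 over the source and the chunk text."""
    digest = hashlib.sha256()
    digest.update(source.encode("utf-8"))
    digest.update(b"\x00")  # separator so ("ab", "c") and ("a", "bc") hash differently
    digest.update(text.encode("utf-8"))
    return digest.hexdigest()
```

Inside the loop this would replace `DOC_ID = str(uuid.uuid4())` with `DOC_ID = chunk_id(text.metadata["source"], text.page_content)`. The trade-off is that two identical chunks from the same page collapse into one entry.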

Build Query Tool

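I guess I need to work on this more, but here is a script to tie the two parts together. It embeds the question from the command line with Ollama, fetches the five closest chunks from ChromaDB and asks the main model to answer using them. I am starting to like Jupyter notebooks as a way to prototype.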

```python
import sys

import chromadb
import ollama

from utilities import getconfig

config = getconfig()
embedmodel = config["embedmodel"]
mainmodel = config["mainmodel"]

chroma = chromadb.HttpClient(host="localhost", port=8000)
collection = chroma.get_or_create_collection("buildragwithpython")

# The question is taken from the command-line arguments
query = " ".join(sys.argv[1:])

queryembed = ollama.embeddings(model=embedmodel, prompt=query)["embedding"]
if not queryembed:
    print("Error: No embedding returned")
    sys.exit(1)

# Fetch the 5 closest chunks; "documents" holds one list of results per query embedding
relevantdocs = collection.query(query_embeddings=[queryembed], n_results=5)["documents"][0]
DOCS = "\n\n".join(relevantdocs)
MODEL_QUERY = f"{query} - Answer that question using the following text as a resource: {DOCS}"

# Stream the answer as it is generated
stream = ollama.generate(model=mainmodel, prompt=MODEL_QUERY, stream=True)
for chunk in stream:
    if chunk["response"]:
        print(chunk["response"], end="", flush=True)
print()
```
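The script imports getconfig from a utilities module that isn't shown on this page. A minimal hypothetical version might look like the following. It assumes the model names live in a `[main]` section of a config.ini file with lines such as `embedmodel = nomic-embed-text` and `mainmodel = llama3.2`. The file name, section name and `parse_config` helper are all assumptions.

```python
import configparser


def parse_config(text: str, section: str = "main") -> dict:
    """Parse INI text and return one section as a plain dict."""
    config = configparser.ConfigParser()
    config.read_string(text)
    return dict(config[section])


def getconfig(path: str = "config.ini") -> dict:
    """Hypothetical stand-in for utilities.getconfig: read the [main] section of config.ini."""
    with open(path, encoding="utf-8") as f:
        return parse_config(f.read())
```

Keeping the model names in a config file means both scripts can switch embedding or chat models without code changes. The same embedding model must be used for ingestion and querying, or the vectors won't be comparable.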